How to build a message moderation system

Automatic moderation systems reduce the cost of manual moderation and speed it up by processing every user message in real time. This article describes how we developed such a system using machine learning algorithms. We will go through the entire pipeline, from the research tasks and the choice of ML algorithms to deployment in production. In addition, we’ll review existing open datasets and explain how to collect the data you need yourself.

Problem statement

We process active online chats where dozens of users generate short messages every minute. The task is to detect all toxic messages and messages containing obscene phrases in these chats. In terms of machine learning, it is a binary classification problem: each message must be assigned to one of two classes, toxic or normal.

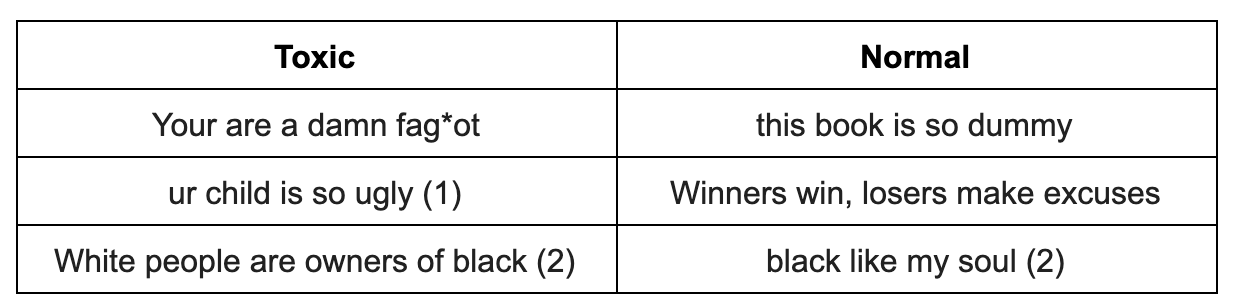

To begin with, let’s define toxic messages. We’ve studied many typical user messages on the Internet, and here are several examples already divided into two groups: toxic and normal.

As expected, toxic messages often contain obscene words, but this is not required. Some messages may be offensive to someone even if they don’t contain any inappropriate words (example 1). Moreover, toxic and normal messages sometimes contain the same words used in different contexts, offensive or not (example 2). Such messages should also be distinguished.

Thus, we define toxic messages as messages that contain obscene or offensive expressions or hate speech.

Data

Open data

One of the most popular datasets for message moderation is the dataset provided for the ‘Toxic Comment Classification Challenge’ on Kaggle. However, this dataset contains many labeling errors: for instance, messages with obscene words are marked as normal. Because of this, we can’t simply run one of the competition kernels and get an effective classification algorithm. We need to dive deep into the data, discover which toxic phrases or scenarios are missing, and add them to the training dataset.

There are some scientific publications with relevant datasets (example). Most of these datasets contain messages collected from Twitter, where one can find lots of short toxic texts. Another reason why data is frequently collected from Twitter is that hashtags can be used to search for and label new toxic messages from users.

Self-collected data

After we had collected the data from open sources and trained the baseline model, it was clear that open data is not enough: we were not satisfied with the quality of the model’s results. We decided to collect data on our own from one of the game messengers because they usually contain loads of toxic content.

We collected about 10k messages, which then had to be labeled. By that time, the baseline classifier had already been trained and was ready to be used for semi-automatic labeling. We applied it to get the probability of toxicity for each message and then sorted the messages by this probability in descending order. In the sorted list, messages with obscene and offensive words were at the top and normal messages were at the bottom. Thus, part of the data was immediately attributed to the toxic class without any manual labeling. The middle part of the list was labeled manually.
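
The sorting-and-splitting step described above can be sketched as follows. This is only an illustration: the function name, the `high` and `low` thresholds, and the idea of setting the bottom of the list aside as likely normal are assumptions, not the values we used.

```python
def split_for_labeling(messages, probs, high=0.9, low=0.1):
    """Sort messages by predicted toxicity and split them into three groups.

    `high` and `low` are hypothetical thresholds; tune them by inspecting
    the sorted list.
    """
    ranked = sorted(zip(messages, probs), key=lambda pair: pair[1], reverse=True)
    auto_toxic = [m for m, p in ranked if p >= high]        # top of the list
    manual = [m for m, p in ranked if low < p < high]       # label by hand
    likely_normal = [m for m, p in ranked if p <= low]      # bottom of the list
    return auto_toxic, manual, likely_normal
```

Only the `manual` group needs a human annotator, which is where the savings come from.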

Data augmentation

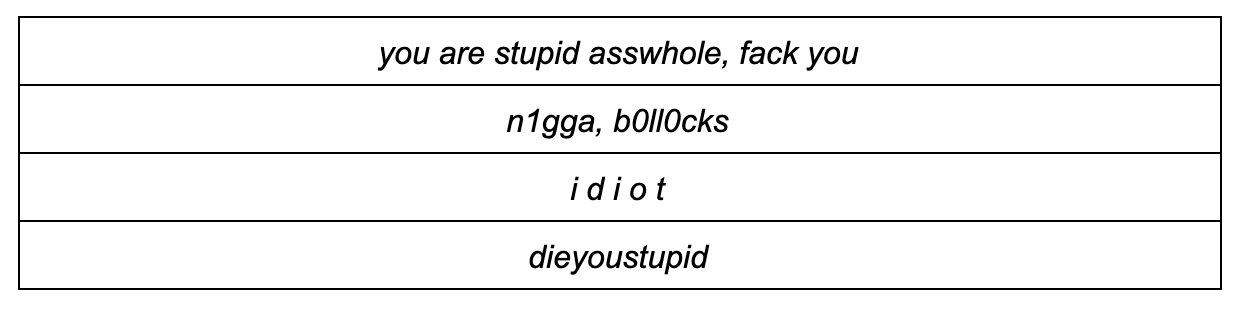

Real datasets contain messages that the classifier gets wrong even though a person understands their meaning correctly.

This happens because users adapt to chat restrictions quickly and learn to deceive the moderation system so that the algorithm misses a toxic message. What users do:

- generate typos

- replace letters with digits that look similar

- insert additional spaces between letters

- remove spaces between words.

To train a classifier that is robust to such substitutions, we need to act in the same way as these users: generate similar changes in the messages and add the results to the training data. In general, this struggle is inevitable: users will always look for vulnerabilities and hacks, and moderators will keep improving the detection algorithms.
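
A minimal sketch of such augmentation, covering the four tricks listed above, might look like this. The `LOOKALIKES` table and all function names are hypothetical; a real table would cover the alphabet and habits of your own chat.

```python
import random

# Hypothetical look-alike map; extend it for the alphabet of your chat.
LOOKALIKES = {"a": "4", "e": "3", "i": "1", "o": "0", "s": "5", "t": "7"}

def swap_adjacent(text, rng):
    """Simulate a typo by swapping two neighbouring characters."""
    if len(text) < 2:
        return text
    i = rng.randrange(len(text) - 1)
    chars = list(text)
    chars[i], chars[i + 1] = chars[i + 1], chars[i]
    return "".join(chars)

def to_lookalikes(text, rng, prob=0.5):
    """Replace letters with similar-looking digits, each with probability prob."""
    return "".join(
        LOOKALIKES[c.lower()] if c.lower() in LOOKALIKES and rng.random() < prob else c
        for c in text
    )

def space_out_word(text, rng):
    """Insert spaces between the letters of one randomly chosen word."""
    words = text.split()
    if not words:
        return text
    i = rng.randrange(len(words))
    words[i] = " ".join(words[i])
    return " ".join(words)

def drop_spaces(text):
    """Remove all spaces between words."""
    return "".join(text.split())

def augment(text, n_variants=4, seed=0):
    """Return n_variants distorted copies of text, one random trick each."""
    rng = random.Random(seed)
    ops = [
        lambda t: swap_adjacent(t, rng),
        lambda t: to_lookalikes(t, rng),
        lambda t: space_out_word(t, rng),
        drop_spaces,
    ]
    return [rng.choice(ops)(text) for _ in range(n_variants)]
```

Each augmented copy keeps the label of the original message when it is added to the training data.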

Moderation modes

We had to come up with solutions for message processing in two modes:

- online mode: real-time analysis of messages with a fast response;

- offline mode: slower analysis of message logs that highlights toxic dialogs.

In online mode, we preprocess each user message and run it through the model. If the message is toxic, we don’t let it into the chat interface; if it is normal, it is displayed. In this mode, all messages must be processed very quickly: the model must respond fast enough that the flow of the dialogue between users is not disrupted.

For the offline mode, there is no time limit, and therefore we wanted to implement a model with the highest quality possible.

Online mode

Dictionary search

Whatever model we choose, we must detect and filter out obscene words. The easiest way to solve this subtask is to compile a dictionary of unacceptable words and expressions and check each message against it. This is part of message preprocessing and must work very fast, so we chose the Aho-Corasick algorithm, which finds all words from a set in a string in a single pass. With this approach, we can quickly identify toxic phrases and block messages without passing them to the main algorithm. The ML algorithm is then applied to the messages that passed the dictionary check, which allows us to “understand” their meaning and improves the quality of classification.
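
To make the dictionary check concrete, here is a compact pure-Python sketch of an Aho-Corasick automaton. It is written for illustration and is not our production code; the class, the `BANNED` list, and `is_blocked` are hypothetical names, and in practice an optimized library implementation would be the more likely choice.

```python
from collections import deque

class AhoCorasick:
    """Minimal Aho-Corasick automaton for multi-pattern substring search."""

    def __init__(self, patterns):
        # Per node: outgoing edges, failure link, patterns that end here.
        self.goto = [{}]
        self.fail = [0]
        self.out = [[]]
        for pattern in patterns:
            self._add(pattern)
        self._build_failure_links()

    def _add(self, pattern):
        node = 0
        for ch in pattern:
            if ch not in self.goto[node]:
                self.goto.append({})
                self.fail.append(0)
                self.out.append([])
                self.goto[node][ch] = len(self.goto) - 1
            node = self.goto[node][ch]
        self.out[node].append(pattern)

    def _build_failure_links(self):
        # Breadth-first traversal; depth-1 nodes keep the root as failure link.
        queue = deque(self.goto[0].values())
        while queue:
            node = queue.popleft()
            for ch, child in self.goto[node].items():
                queue.append(child)
                f = self.fail[node]
                while f and ch not in self.goto[f]:
                    f = self.fail[f]
                self.fail[child] = self.goto[f].get(ch, 0)
                # Inherit the patterns that end at the failure target.
                self.out[child] = self.out[child] + self.out[self.fail[child]]

    def find(self, text):
        """Yield (start_index, pattern) for every occurrence in text."""
        node = 0
        for i, ch in enumerate(text):
            while node and ch not in self.goto[node]:
                node = self.fail[node]
            node = self.goto[node].get(ch, 0)
            for pattern in self.out[node]:
                yield i - len(pattern) + 1, pattern

BANNED = ["idiot", "moron"]  # hypothetical placeholder dictionary
automaton = AhoCorasick(BANNED)

def is_blocked(message):
    """True if the lowercased message contains any banned entry."""
    return next(automaton.find(message.lower()), None) is not None
```

Note that this matches substrings, so a banned entry hidden inside a harmless longer word would also trigger a block. Checking word boundaries around each match is one way to reduce such false positives.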

Baseline model

For the baseline model, we decided to use the standard approach to text classification: TF-IDF features combined with a classical classification algorithm.

TF-IDF is a statistical measure of how important a word is to a text in a corpus. It is based on two quantities: the frequency of the word in each document and the number of documents containing the word (details here). When we calculate TF-IDF for each word in the message, we get a vector representation of this message.

TF-IDF can be calculated for words in the text as well as for n-grams of words and characters. This extension usually works better because it captures frequently encountered phrases and, through character n-grams, words that were not present in the training data.
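
As an illustration, the textbook form of the measure (term frequency multiplied by the logarithm of the inverse document frequency) can be computed in a few lines. The function name is hypothetical. scikit-learn's `TfidfVectorizer` uses a smoothed IDF and L2-normalizes each vector by default, so its numbers will differ from this sketch.

```python
import math
from collections import Counter

def tfidf_vectors(documents):
    """Return one {word: weight} dict per tokenized document."""
    n_docs = len(documents)
    # Document frequency: in how many documents each word appears.
    df = Counter(word for doc in documents for word in set(doc))
    vectors = []
    for doc in documents:
        counts = Counter(doc)
        vectors.append({
            # tf = share of the document taken by the word; idf = log(N / df).
            word: (count / len(doc)) * math.log(n_docs / df[word])
            for word, count in counts.items()
        })
    return vectors
```

A word that occurs in every document gets weight 0, while a word that occurs in only a few documents gets a high weight in those documents.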

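    from scipy import sparse
    from sklearn.feature_extraction.text import TfidfVectorizer

    # x_train is the list of training messages (raw strings).
    # stop_words is not defined in the original snippet; "english" is a
    # placeholder, pass your own list of words if you have one.
    stop_words = "english"

    # Word-level features: unigrams to trigrams.
    vect_word = TfidfVectorizer(max_features=10000, lowercase=True, analyzer='word',
                                min_df=8, stop_words=stop_words, ngram_range=(1, 3))
    # Character-level features: 3- to 6-character n-grams.
    vect_char = TfidfVectorizer(max_features=30000, lowercase=True, analyzer='char',
                                min_df=8, ngram_range=(3, 6))

    x_vec_word = vect_word.fit_transform(x_train)
    x_vec_char = vect_char.fit_transform(x_train)
    # Concatenate both feature blocks into one sparse matrix.
    x_vec = sparse.hstack([x_vec_word, x_vec_char])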

After we’ve turned messages into vectors, we can apply any familiar classification method: logistic regression, SVM, random forest, gradient boosting.

For our task, we chose logistic regression because it is faster than the other classical ML classifiers.

The resulting algorithm based on TF-IDF and logistic regression works quickly and identifies messages with offensive words and expressions well, but it does not always understand the meaning of the rest of the text. For example, non-offensive messages with the words black and feminism might get into the toxic class. With the improved version of the classifier, we wanted to fix this problem and learn to understand the deeper meaning of the texts better.
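
One way to see where the speed comes from: at prediction time, logistic regression is a single sparse dot product followed by a sigmoid. The sketch below assumes the weights are given as a plain dictionary; the function name is hypothetical, and in practice the values would come from a trained model (for example the `coef_` and `intercept_` attributes of scikit-learn's `LogisticRegression`).

```python
import math

def predict_toxicity(features, weights, bias):
    """Probability of the toxic class for one sparse feature vector."""
    # Only the non-zero features of the message contribute to the score.
    score = bias + sum(value * weights.get(name, 0.0)
                       for name, value in features.items())
    # Numerically stable sigmoid.
    if score >= 0:
        return 1.0 / (1.0 + math.exp(-score))
    e = math.exp(score)
    return e / (1.0 + e)
```

A short chat message has only a handful of non-zero TF-IDF features, so the cost per message stays small regardless of the vocabulary size.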

Offline mode

To capture the meaning of messages better, one can use the following neural network approaches:

- Embeddings (Word2Vec, FastText)

- Neural Networks (CNN, RNN)

- New pretrained models (ELMo, ULMFiT, BERT)

Let’s discuss some of these algorithms and how they can be used.

Word2Vec and FastText

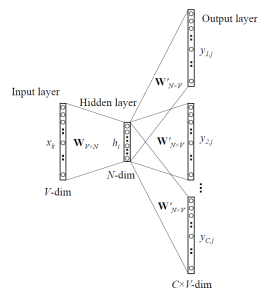

Embedding models transform words from texts into vector representations. Word2Vec comes in two variants: Skip-gram and CBOW (Continuous Bag of Words). In Skip-gram, the context is predicted from the word, and in CBOW, the opposite: the word is predicted from the context.

Such models are trained on large text corpora, and the word vectors are taken from a hidden layer of the trained neural network. The disadvantage of this architecture is that the model only knows the limited set of words that were present in the corpus. Words that were not in the corpus at the training stage have no embeddings. This often happens with pretrained models: some words in your data are missing their embeddings, and a large amount of useful information is lost.

To solve the problem of out-of-vocabulary (OOV) words, there is an improved embedding model called FastText. Instead of training the neural network on whole words only, FastText breaks words into character n-grams (subwords) and learns vectors for them. To get the vector representation of a word, we take the vector representations of its n-grams and add them up.

Thus, to obtain feature vectors from messages, we can use pretrained Word2Vec and FastText models. These vector representations can then be classified using classical ML classifiers or a fully connected neural network.
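
The difference between the two variants is easiest to see in the training examples they produce. The sketch below only generates those examples and does not train anything; the function names and the `window` parameter are illustrative.

```python
def skipgram_pairs(tokens, window=2):
    """Skip-gram: (input word, one context word to predict) pairs."""
    pairs = []
    for i, center in enumerate(tokens):
        lo, hi = max(0, i - window), min(len(tokens), i + window + 1)
        pairs.extend((center, tokens[j]) for j in range(lo, hi) if j != i)
    return pairs

def cbow_pairs(tokens, window=2):
    """CBOW: (list of context words, word to predict) pairs."""
    pairs = []
    for i, center in enumerate(tokens):
        lo, hi = max(0, i - window), min(len(tokens), i + window + 1)
        context = [tokens[j] for j in range(lo, hi) if j != i]
        pairs.append((context, center))
    return pairs
```

For the tokens `["you", "are", "so", "kind"]` with `window=1`, Skip-gram yields pairs such as `("are", "you")` and `("are", "so")`, while CBOW yields `(["you", "so"], "are")`.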
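
A rough sketch of the subword idea: wrap the word in boundary markers, cut it into character n-grams, and sum the n-gram vectors. The toy `ngram_vectors` dictionary and the function names are assumptions made for illustration. As far as we know, the real FastText library hashes n-grams into a fixed number of buckets rather than storing them by name, and also keeps a vector for the whole word.

```python
def char_ngrams(word, n_min=3, n_max=6):
    """Character n-grams of a word wrapped in '<' and '>' boundary markers."""
    marked = f"<{word}>"
    return [marked[i:i + n]
            for n in range(n_min, n_max + 1)
            for i in range(len(marked) - n + 1)]

def word_vector(word, ngram_vectors, dim):
    """Sum the vectors of the known n-grams of a word."""
    vec = [0.0] * dim
    for gram in char_ngrams(word):
        # N-grams without a learned vector contribute nothing.
        for k, x in enumerate(ngram_vectors.get(gram, ())):
            vec[k] += x
    return vec
```

Because a misspelled or unseen word still shares most of its n-grams with known words, it gets a meaningful vector instead of no vector at all.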
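
A message consists of several words, so the word vectors have to be combined into one feature vector before classification. One simple and common option, shown here as an assumption rather than as the method we used, is to average the vectors of the words that have an embedding. The `embeddings` dictionary stands in for a pretrained model.

```python
def message_vector(tokens, embeddings, dim):
    """Average the vectors of the tokens that have an embedding."""
    known = [embeddings[t] for t in tokens if t in embeddings]
    if not known:
        # No known words at all: fall back to a zero vector.
        return [0.0] * dim
    return [sum(vec[k] for vec in known) / len(known) for k in range(dim)]
```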

Text classification with CNN

In text processing tasks, recurrent neural networks (LSTM, GRU) are used more often than other neural network architectures because they cope with sequences better. Convolutional neural networks (CNN) are mostly used for image processing, but they can also be applied to text classification. Let’s consider how this can be implemented.

Each message is represented by a matrix in which each row is the vector representation of one word. The convolution is applied to this matrix in a specific way: the filter “slides” across whole rows of the matrix (word vectors) and covers several words at a time (usually 2–5 words), thus processing each word in the context of its neighbors. More details on the process are in the following picture.

Why should we use convolutional networks for text processing when we can use recurrent ones? The fact is that convolutions work much faster. Applying them to the message classification task, we can save time on training.
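
The sliding filter described above can be written out in plain Python to show the mechanics. This is a sketch of a single filter only: the ReLU activation and the max-over-time pooling are assumptions (both are common in text CNNs), and a real model would learn many filters with a deep learning framework.

```python
def conv_over_words(matrix, kernel, bias=0.0):
    """Slide a (k x dim) filter down a (words x dim) matrix, ReLU per window."""
    k = len(kernel)  # number of adjacent words the filter covers
    features = []
    for start in range(len(matrix) - k + 1):
        total = bias
        # The filter spans whole rows, so it never splits a word vector.
        for row, kernel_row in zip(matrix[start:start + k], kernel):
            total += sum(x * w for x, w in zip(row, kernel_row))
        features.append(max(0.0, total))
    return features

def max_over_time(features):
    """Keep the strongest response of the filter anywhere in the message."""
    return max(features) if features else 0.0
```

Every window is computed independently of the others, which is why convolutions parallelize well, whereas a recurrent network has to process the words one after another.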

ELMo

ELMo (Embeddings from Language Models) is a recently introduced embedding model based on a language model. It differs from Word2Vec and FastText models and provides word vectors which have certain advantages:

- The word representation depends on the whole context in which it is used.

- The representation is built from characters, which makes it possible to form reliable representations for out-of-vocabulary words.

ELMo can be used for different NLP tasks. In our setup, the ELMo vectors of a message can again be classified by a classical ML classifier or by a convolutional or fully connected network.

Pretrained ELMo embeddings are simple enough to use, and an example of usage can be found here.

Implementation features

Flask API

The API prototype was written in Flask, as it is easy to use.

Two Docker images

For deployment, we used two Docker images: a base image with all the dependencies installed and a main image that launches the application. The base image is rarely rebuilt, which saves a lot of time on each deploy: building and downloading machine learning frameworks takes quite a long time and is not necessary at every commit.

Testing

A specific feature of many machine learning algorithms is that, even with high metric values on a validation dataset, their actual quality in production might be low. Therefore, to test the algorithm in real conditions, the whole team used a bot in Slack. This is very convenient because any team member can check how the algorithms respond to a specific message. This testing method also lets us see immediately how the algorithms work on real data.

A good alternative to a Slack bot is to run trial solutions on crowdsourcing services such as Yandex Toloka and Amazon Mechanical Turk.

Conclusion

We’ve considered several approaches to automatic message moderation and described the features of our implementation. The main observations obtained during our development process:

- Dictionary search combined with a machine learning algorithm based on TF-IDF and logistic regression classifies messages quickly but not always correctly.

- Algorithms based on neural networks and pretrained embedding models cope with this task better and can detect toxicity from the meaning of a message.

Finally, we would like to announce that we’ve released an open demo of the Poteha Toxic Comment Detection system as a Facebook bot. Please try it and help us make the system better!

And thank you for reading! Please, ask us questions, leave your comments and stay tuned! Find us at https://potehalabs.com
