ExpressJS vs Actix-Web: It Is Exactly What You Think

The goal of this analysis is to try to understand what kind of gains a programmer should expect by using Rust and actix-web rather than Node and Express under typical use, without custom optimizations.

Summary

- Compare performance, stability and running cost of a simple microservice-like workload on Node.js/Express vs actix-web. The setup is limited to 1 CPU core and uses a common development pattern for each.

- Actix provides about 85% running cost saving in heavily loaded environments, along with a smaller memory footprint and stronger runtime safety guarantees. It can also scale across CPU cores with minimal extra memory, which opens up further savings.

- Rust’s type checking and compile-time validation of concurrent logic saved time: the code worked reliably once it compiled. The Node solution required time to debug and fix issues at runtime, as well as explicit type validation.

- ExpressJS is a fast, minimalist and the most popular Node.js web framework. Node.js is a JavaScript runtime built on Chrome’s V8 JavaScript engine. According to w3techs research it is among the top 10 most popular application servers used by high-traffic sites, and its share is growing.

- Actix is a small, pragmatic and extremely fast web framework for Rust. According to the TechEmpower Round 18 report, it is the fastest web application platform in 4 out of 6 categories.

- Code is available at https://github.com/dunnock/actix-node-benchmark

Disclaimer: this research focuses purely on quality aspects and the cost of running apps. It should not be taken as advice to rewrite from JavaScript to Rust, and it does not account for the cost of such a rewrite.

Test scenario and setup

Presume we have a microservice which allows its clients to search for tasks, where tasks might be assigned to workers. Our simple database model has just two tables, worker and task, and task has an assignee relation to worker:

    CREATE TABLE worker (
        id SERIAL PRIMARY KEY,
        name varchar(255) NOT NULL,
        email varchar(255) NULL,
        score integer DEFAULT 0
    );

    CREATE TABLE task (
        id SERIAL PRIMARY KEY,
        summary varchar(255) NOT NULL,
        description text NOT NULL,
        assignee_id integer NULL REFERENCES worker,
        created TIMESTAMP WITH TIME ZONE DEFAULT CURRENT_TIMESTAMP
    );

actix-node-bench-db-create.sql

Let’s compare how the application servers behave and check resource consumption under moderate to high load. Let’s assume that the database is not a bottleneck for now (we’ll leave that for a different post).

The Node.js app is built with the Express web framework and the knex SQL builder library; both are widely popular. Knex claims to use prepared SQL statements under the hood and has a built-in connection pool, so it should be comparable to the Rust setup. Main handler:

    router.get('/tasks', (req, res, next) => {
      let { assignee_name, summary, limit, full } = req.query;
      // Query parameters arrive as strings, so convert the flag explicitly
      full = full === "true";
      // Reject a non-numeric limit before it reaches the database layer
      if (!!limit && isNaN(limit)) {
        return next(createError({
          status: 400,
          message: "limit query parameter should be a number"
        }));
      }
      db.get_tasks(assignee_name, summary, limit, full)
        .then(tasks => res.send(tasks))
        .catch(err => next(createError(err)))
    })

get_tasks.js

The actix-web service is based on the async-pg example. It uses deadpool-postgres as the async connection pool and tokio-pg-mapper for SQL type bindings. The main route handler looks similar to the Express one, except that type checks on parameters are performed based on the type definition:

    #[get("/tasks")]
    pub async fn get_tasks(
        query: Query<GetTasksQuery>, db_pool: Data<Pool>
    ) -> Result<HttpResponse, BenchError> {
        let tasks = db::get_tasks(db_pool.into_inner(), query.into_inner()).await?;
        Ok(HttpResponse::Ok().json(tasks))
    }

    #[derive(Deserialize)]
    pub struct GetTasksQuery {
        pub summary: Option<String>,
        pub assignee_name: Option<String>,
        pub limit: Option<u32>,
        pub full: Option<bool>
    }

get_tasks.rs

Load test

Testing was performed on Ubuntu 18 on a Xeon E5–2660 with 40 cores. The database was initialized with 100_000 randomly generated tasks assigned to 1000 workers.

Using wrk, we are going to load the server with identical predefined requests over a number of concurrent connections. This should suit our case well, as our server does not cache search results.

We’ll need to run multiple tests with measurements of CPU, memory, latency and rps, so automation will pay off. Rust has a rich toolbox for writing CLI automation, including config, structopt and resource monitoring, so it was quite easy to automate. The tests that follow can be executed via:

cargo run --bin benchit --release -- -m -t 30

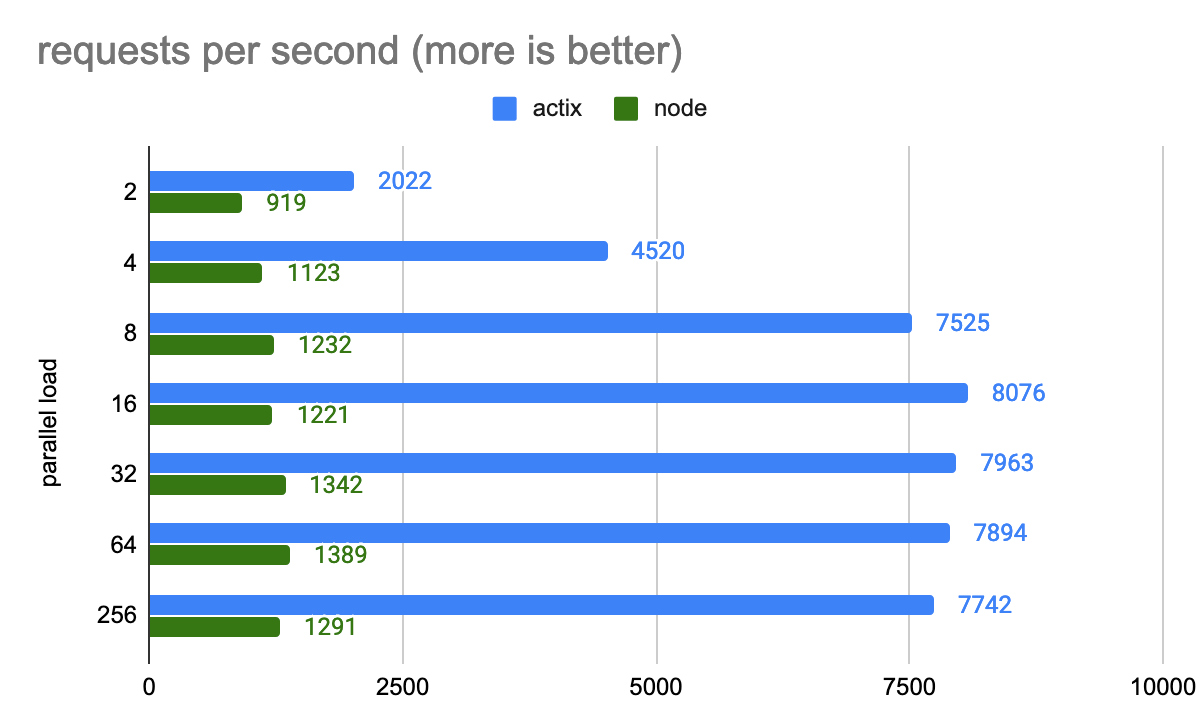

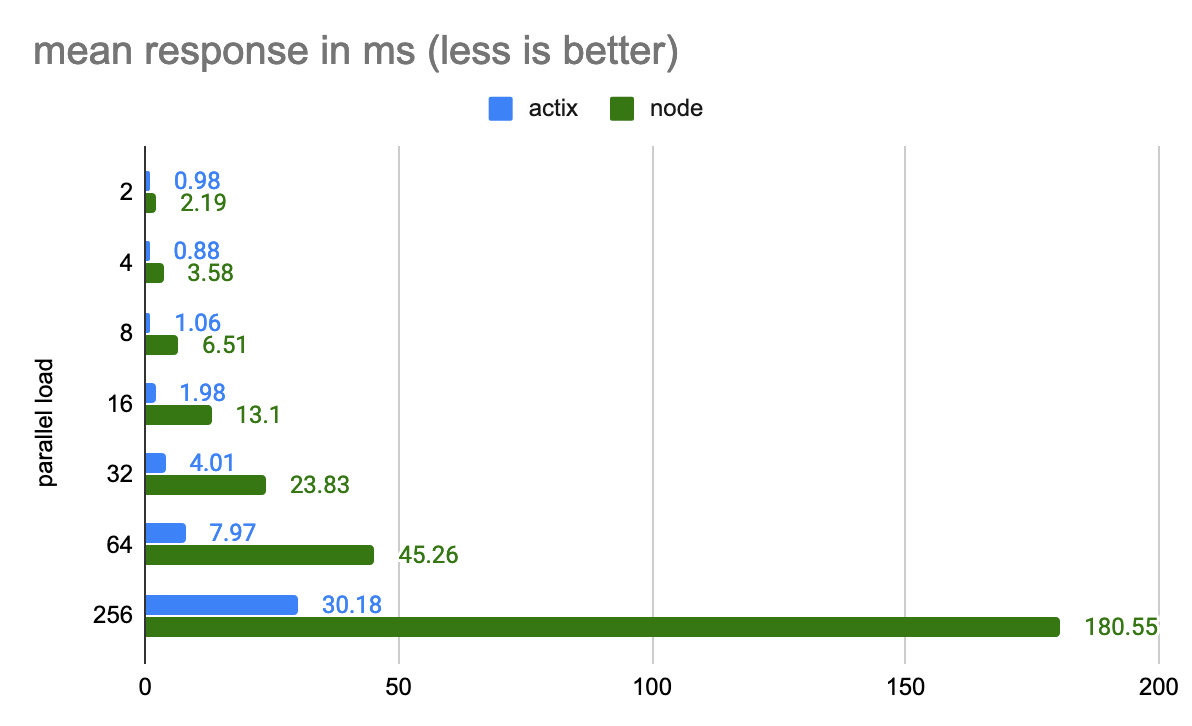

1. Fetch top 10 tasks

The search returns the same first 10 records without the “fat” description field; each row is under 500 bytes. It will be light for the SQL server and should provide maximum requests per second. The test is performed with the following command line under the hood:

wrk -c <concurrency> -d 30s "http://<host>:<port>/tasks"
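A sweep over several concurrency levels is easy to script. The author's own automation is the Rust `benchit` binary; purely as an illustration, the JavaScript sketch below builds the wrk argument list for each level and extracts the `Requests/sec` figure from wrk's summary output. The function names, the URL and the list of concurrency levels are assumptions, not taken from the repository.

```javascript
// Hypothetical helpers: names, URL and concurrency levels are illustrative only.

// Build the argument list for one run: wrk -c <concurrency> -d <seconds>s <url>
function wrkArgs(concurrency, durationSeconds, url) {
  return ["-c", String(concurrency), "-d", `${durationSeconds}s`, url];
}

// Extract the "Requests/sec" value that wrk prints in its summary.
// Returns null when the line is missing (for example, when wrk could not connect).
function parseRequestsPerSecond(wrkOutput) {
  const match = /Requests\/sec:\s*([\d.]+)/.exec(wrkOutput);
  return match ? Number(match[1]) : null;
}

const levels = [4, 8, 16, 32, 64]; // assumed sweep, not the author's exact list
const plan = levels.map((c) => wrkArgs(c, 30, "http://localhost:8080/tasks"));
console.log(plan.map((args) => `wrk ${args.join(" ")}`).join("\n"));
```

Each argument list can be handed to a process spawner, and the parsed value stored next to the CPU and memory samples taken during the same run.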

To compare actix-web with Node.js we will initially limit actix to 1 core (i.e. 1 worker), which will execute our web processing code. Deadpool will be configured with 15 connections and will handle database communication in parallel.

WORKERS=1 cargo run --release --bin actix-bench

Node.js executes JavaScript in a single thread and handles asynchronous I/O in the background. We will configure knex to use 5 to 15 connections in the pool, which should match the deadpool configuration:

cd node-bench; npm run start
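For reference, a knex pool of 5 to 15 connections is usually expressed through the `pool.min` and `pool.max` options. The object below is a minimal sketch of such a configuration; the environment variable name and the fallback connection string are assumptions and may differ from what the repository uses.

```javascript
// Sketch of a knex configuration with a 5..15 connection pool.
// DATABASE_URL and the fallback connection string are assumed names.
const knexConfig = {
  client: "pg",
  connection: process.env.DATABASE_URL || "postgres://localhost/bench",
  pool: { min: 5, max: 15 },
};

// Usage (requires the knex and pg packages to be installed):
// const db = require("knex")(knexConfig);
console.log(knexConfig.pool);
```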

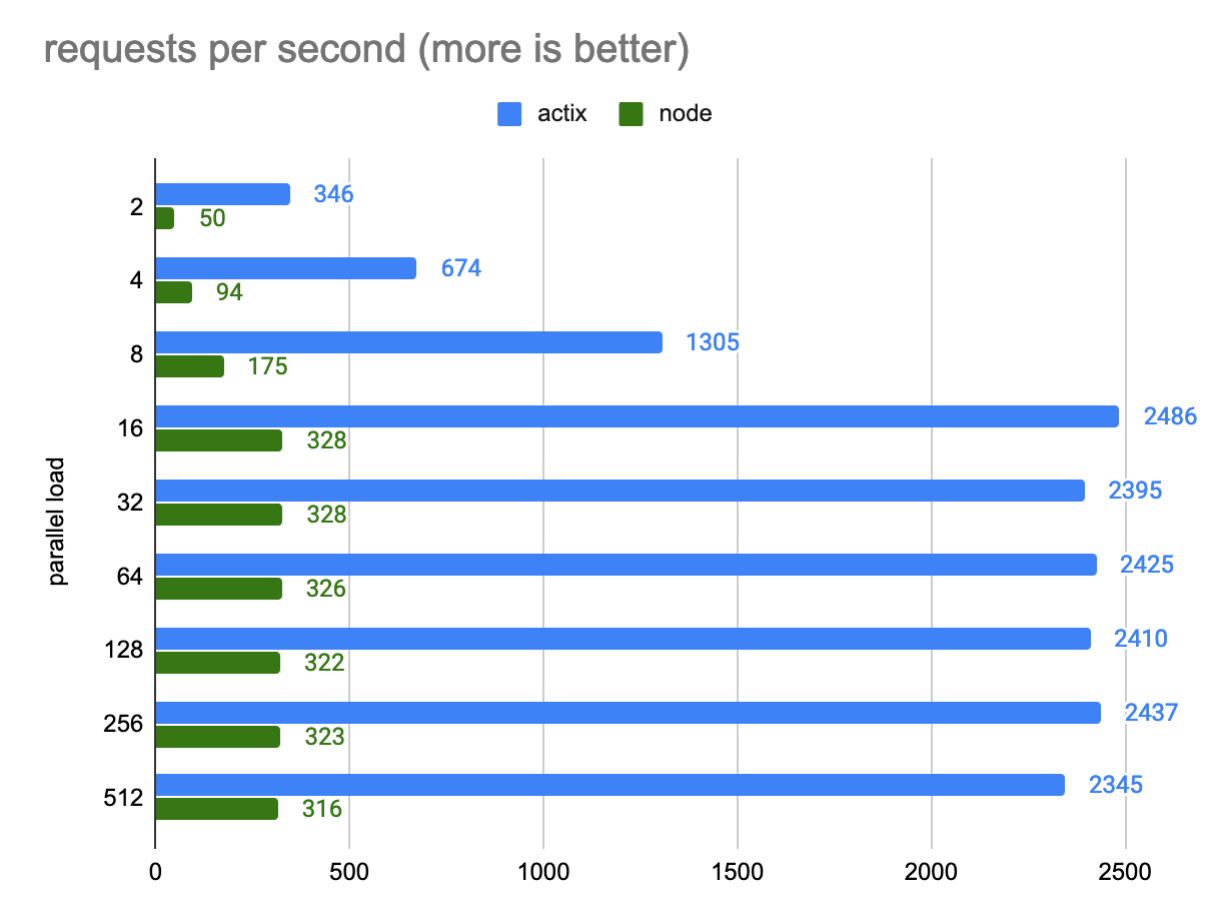

https://gist.github.com/dunnock/b94e37f3342b8d411df5e469fe442786#file-actix-node-benchmark-load-tasks-csv

Looking at concurrency and rps, even under a small load actix responds at least 2 times faster than Node, and under heavy load it is 6 times faster. Judging by database utilization, actix appears to be about 5 times more efficient. Looking at response times, Node.js at concurrency = 4 shows a similar delay (~4 ms) to actix at concurrency = 32, so actix sustains 8 times more concurrent connections at the same latency. The memory footprint is better still: on a long run with 1 million requests, memory for the actix service stayed around 26 MB, while Node.js grew to 104 MB. Hello, GC!
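The "8 times" figure can be cross-checked with a rough estimate. wrk keeps every connection busy, so throughput is approximately the number of connections divided by the mean latency (Little's law). The sketch below applies that to the two data points quoted above; it is an approximation under the assumption that both latencies are exactly 4 ms, and the measured values in the linked CSV remain the authoritative numbers.

```javascript
// Approximate throughput of a closed-loop load generator (Little's law):
// requests per second ≈ concurrent connections / mean latency in seconds.
function approxRps(concurrency, latencyMs) {
  return (concurrency * 1000) / latencyMs;
}

const nodeRps = approxRps(4, 4);   // Node.js: 4 connections at ~4 ms
const actixRps = approxRps(32, 4); // actix: 32 connections at ~4 ms
console.log(nodeRps, actixRps, actixRps / nodeRps);
```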

Conclusion: for loaded systems we will need 6 times fewer CPU cores with actix than with Node.js, which is a cost saving of roughly 85% (1 − 1/6 ≈ 83%).
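The relation between a throughput ratio and the resulting saving is simple arithmetic: if one core does the work of N, the remaining cost is 1/N of the original. The sketch below spells this out, assuming cost scales linearly with the number of cores (an assumption; real cloud pricing also depends on memory and instance type).

```javascript
// Fraction of CPU cost saved when one core does the work of `speedup` cores,
// assuming cost is proportional to the number of cores.
function costSaving(speedup) {
  return 1 - 1 / speedup;
}

// Inverse: the speedup needed to reach a given saving (0.85 means 85%).
function speedupForSaving(saving) {
  return 1 / (1 - saving);
}

for (const speedup of [4, 6, 20]) {
  console.log(`${speedup}x faster: ${Math.round(costSaving(speedup) * 100)}% saving`);
}
console.log(`85% saving needs ~${speedupForSaving(0.85).toFixed(2)}x`);
```

Under this model the 75% to 95% range quoted in the final summary corresponds to speedups between 4x and 20x.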

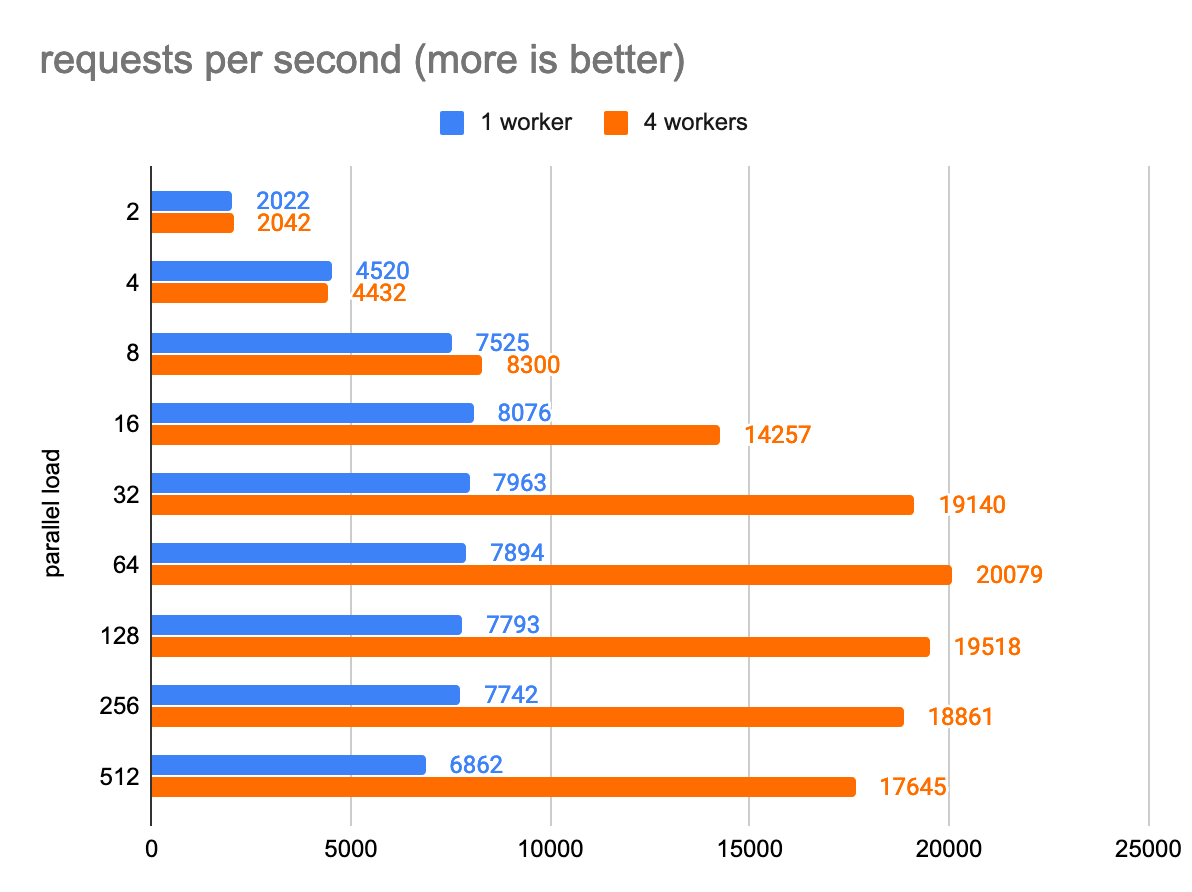

Actix is very efficient when using multiple CPU cores: a fixed set of worker threads (workers) minimizes context switches. By default actix starts as many worker threads as there are CPU cores.

Let’s see how well actix can scale on CPU with 4 workers and compare it with the 1-worker actix setup:

WORKERS=4 PG_POOL_MAX_SIZE=30 cargo run --release --bin actix-bench

With 4 workers actix provides about 3 times better response time than with 1 worker under heavy load, while also serving 3 times more responses per second. Actix scales well across CPU cores, keeping memory consumption at almost the same level regardless of the number of cores in use. Node can also scale across cores by running a new process per core, but that duplicates memory usage for every new instance.

Actix can fully utilize cheap cloud instances with 2 or more cores, while Node would require more expensive instances with more memory.
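For context, running one Node process per core is typically done with Node's built-in `cluster` module. The sketch below is not part of the benchmark; it only illustrates the pattern, and the helper names and the entry point in the usage comment are hypothetical. Each forked process has its own V8 heap, which is why memory grows with the process count.

```javascript
const cluster = require("node:cluster");
const os = require("node:os");

// Pick how many processes to run: the requested count, capped by available cores.
// Falls back to one process per core when no valid count is requested.
function workerCount(requested, availableCores = os.cpus().length) {
  const wanted = Number.isInteger(requested) && requested > 0 ? requested : availableCores;
  return Math.min(wanted, availableCores);
}

// In the primary process, fork the workers; in each worker, start the server.
function startCluster(startServer, requested) {
  if (cluster.isPrimary) {
    const count = workerCount(requested);
    for (let i = 0; i < count; i++) cluster.fork();
  } else {
    startServer();
  }
}

// Usage (hypothetical entry point):
// startCluster(() => require("./app").listen(3000), 4);
```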

2. Search and fetch tasks with description

This search returns 10 matches found by LIKE on the indexed varchar summary column, also fetching the ~3–5 KB TEXT description field. It will definitely put pressure on the SQL server.

wrk -c <concurrency> -d 30s "http://<host>:<port>/tasks?summary=wherever&full=true&limit=10"

The test will be heavy on the DB, hence we will run with 30 connections in the pool.

Quite a surprise: Node.js loads the SQL server at a level similar to actix while delivering ~7 times worse performance. This probably has something to do with knex.js and the way it handles prepared statements, though the goal of this post was to compare typical use. This leads to the final summary:

Actix-web and the Rust ecosystem are a good fit for developing efficient web services. Requiring ~6 times less CPU power and less memory, they allow a significant 75%-95% runtime cost saving with just a basic, unoptimized setup.

The goal of this post was to compare typical use, and it does not account for deep fine-tuning such as Node’s GC settings or low-level SQL libraries. There are opportunities to optimize the Node.js solution and probably narrow the gap, though this would require significant engineering effort, and the same effort could equally be applied to optimizing the Rust solution. In the next post I am going to use node-postgres and tokio-postgres directly and see if the Node code can get closer to actix-web.

Implementation notes

I spent about the same time on the JavaScript and Rust implementations. With JavaScript most of the time was spent fixing issues at runtime. For instance, there was a race condition bug: I forgot that a JavaScript const is not immutable, and it turned out knex was mutating the same query object from all the concurrent requests:

const tasks = knex.from('tasks').innerJoin('workers as assignee', 'assignee.id', 'tasks.assignee_id' );

The code appeared to work nicely in the local environment, but led to huge SQL queries under load. Debugging took some time, but the fix was simple.
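The shared-builder pitfall can be reproduced without a database. The sketch below uses a tiny stand-in class (hypothetical, not knex itself) whose `where()` mutates the instance and returns it for chaining, and contrasts a module-level builder with a factory that creates a fresh query per request. With real knex, calling `.clone()` on a base builder is another common way to avoid sharing state; which fix the author applied is not stated here.

```javascript
// Minimal stand-in for a mutable query builder (hypothetical, NOT knex itself):
// where() mutates the instance and returns it for chaining.
class FakeBuilder {
  constructor(table) {
    this.table = table;
    this.conditions = [];
  }
  where(column, op, value) {
    this.conditions.push(`${column} ${op} '${value}'`);
    return this;
  }
  toString() {
    const where = this.conditions.length ? ` WHERE ${this.conditions.join(" AND ")}` : "";
    return `SELECT * FROM ${this.table}${where}`;
  }
}

// Buggy pattern: one module-level builder shared by every request.
const shared = new FakeBuilder("tasks");
const buggy = (summary) => shared.where("summary", "LIKE", `%${summary}%`).toString();

// Fixed pattern: build a fresh query for every request.
const freshQuery = () => new FakeBuilder("tasks");
const fixed = (summary) => freshQuery().where("summary", "LIKE", `%${summary}%`).toString();

buggy("a");
const buggySecond = buggy("b"); // conditions from both calls accumulate
fixed("a");
const fixedSecond = fixed("b"); // only the condition from this call
console.log(buggySecond);
console.log(fixedSecond);
```

The `const` declaration only prevents rebinding the variable; the object it points to stays mutable, so the query keeps growing with every request.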

I spent significantly less time fixing the Rust part, as it provided type safety guarantees for request and response formats out of the box, type validation for database queries, and concurrency safety.

It was quite surprising that the Rust and JavaScript code turned out to be of similar complexity. For instance, below are the implementations of the main database handlers:

    async function get_tasks(assignee_name, summary, limit, full) {
      let query = full ? query_get_tasks_full() : query_get_tasks();
      if (!!assignee_name) {
        query.where("assignee.name", "LIKE", `%${assignee_name}%`)
      }
      if (!!summary) {
        query.where("summary", "LIKE", `%${summary}%`)
      }
      query.limit(limit || 10);
      let rows = await query;
      return rows.map(row => task.fromRow(row));
    }

knex-get-tasks.js

    pub async fn get_tasks(pool: Arc<Pool>, query: GetTasksQuery) -> Result<Vec<Task>, BenchError> {
        let _stmt = query.get_statement();
        let client = pool.get().await?;
        let stmt = client.prepare_typed(&_stmt, &[Type::VARCHAR, Type::VARCHAR, Type::OID]).await?;
        client.query(
            &stmt,
            &[ &like(query.assignee_name), &like(query.summary), &query.limit.or(Some(10)) ],
        ).await?
        .iter()
        .map(|row| Task::from_row_ref(row).map_err(BenchError::from))
        .collect()
    }

The Rust implementation takes more space for type definitions, though those also provide very useful checks at runtime. For instance, the GetTasksQuery type also validates that all supplied GET request query parameters match their required type and the type required by the database query. The JavaScript route handler required 2 explicit checks in the code to provide such safety just in this case.

    #[derive(Deserialize)]
    pub struct GetTasksQuery {
        pub summary: Option<String>,
        pub assignee_name: Option<String>,
        pub limit: Option<u32>,
        pub full: Option<bool>
    }
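To make the comparison concrete, the explicit checks on the JavaScript side could be gathered into one helper that roughly mirrors what the GetTasksQuery type enforces. This is a sketch under assumptions: the helper name, the return shape and the error messages are hypothetical and not part of the benchmark code.

```javascript
// Hypothetical helper roughly mirroring GetTasksQuery: returns { value } or { error }.
// Input is expected to look like Express's req.query (string values).
function parseTasksQuery(query) {
  const { assignee_name, summary, limit, full } = query;
  const result = { assignee_name, summary, limit: undefined, full: undefined };

  if (limit !== undefined) {
    // Accept only non-negative integers that fit into a u32.
    if (!/^\d+$/.test(limit) || Number(limit) > 0xffffffff) {
      return { error: "limit query parameter should be an unsigned 32-bit integer" };
    }
    result.limit = Number(limit);
  }

  if (full !== undefined) {
    // Accept only the literal strings "true" and "false".
    if (full !== "true" && full !== "false") {
      return { error: "full query parameter should be true or false" };
    }
    result.full = full === "true";
  }

  return { value: result };
}

console.log(parseTasksQuery({ summary: "wherever", full: "true", limit: "10" }));
console.log(parseTasksQuery({ limit: "ten" }));
```

A route handler would call the helper first and respond with status 400 when `error` is set, which keeps the validation rules in one place instead of scattering them across handlers.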

One more thing to note: the Rust server handles data initialization, as tokio-postgres supports the PSQL COPY ... FROM BINARY statement, which allows generating and loading 100k records in 20 seconds. Texts are generated randomly with a Markov chain built from the tale of the ring of Gyges, which emulates a regular text load for the search index.
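The text generation idea is straightforward to sketch. The author's generator lives in the Rust server; the JavaScript version below only illustrates a word-level Markov chain, and the short corpus is a made-up placeholder rather than the actual tale. The random source is injectable so the output can be made deterministic.

```javascript
// Build a word-level Markov chain: each word maps to the list of words that followed it.
function buildChain(text) {
  const words = text.split(/\s+/).filter(Boolean);
  const chain = new Map();
  for (let i = 0; i < words.length - 1; i++) {
    if (!chain.has(words[i])) chain.set(words[i], []);
    chain.get(words[i]).push(words[i + 1]);
  }
  return chain;
}

// Generate up to `length` words starting from `start`.
// `random` must return a number in [0, 1); it defaults to Math.random.
function generate(chain, start, length, random = Math.random) {
  const out = [start];
  let current = start;
  while (out.length < length) {
    const next = chain.get(current);
    if (!next) break; // dead end: this word never had a successor in the corpus
    current = next[Math.floor(random() * next.length)];
    out.push(current);
  }
  return out.join(" ");
}

// Placeholder corpus (assumption), standing in for the real source text.
const corpus = "the ring made the shepherd invisible and the shepherd took the ring";
const gygesChain = buildChain(corpus);
console.log(generate(gygesChain, "the", 8));
```

Because successors are stored with repetition, more frequent word pairs are picked more often, which keeps the generated text statistically close to the source.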
