How to create your own Instagram Bot with Node.js

As the news broke about Instagram hitting one billion monthly active users, I couldn’t help but create an account of my own and try to build up a following.

Given my keen interest in IT, however, I wanted to take the opportunity to test some real-world applications of what I had been learning. Instead of putting in all the tedious work of gaining followers by hand, I would let a bot do it.

The first thing I did was look for an Instagram API. To no avail, however: it turned out to be outdated and of little use.

And although Facebook has recently been releasing a new Instagram API, it only supports business clients.

But hey, that’s no problem — I thought — I can create one of my own.

And that is precisely what we will learn today.

If you think about it, Instagram’s website is in and of itself the platform’s API. All we need to do is figure out how to interact with it programmatically instead of manually, the way regular users do.

And when there’s a will there’s a way.

This is where Puppeteer comes into the picture. The library lets us launch a headless Google Chrome / Chromium instance and control it through the DevTools protocol.

Setting up the project

Go ahead and clone the repository:

git clone https://github.com/maciejcieslar/instagrambot.git

Our structure looks like this:

instagrambot/
|-- .env
|-- .eslintrc.js
|-- .gitignore
|-- README.md
|-- package-lock.json
|-- package.json
|-- src/
|   |-- common/
|   |   |-- browser/
|   |   |   |-- api/
|   |   |   |   |-- authenticate.ts
|   |   |   |   |-- comment-post.ts
|   |   |   |   |-- find-posts.ts
|   |   |   |   |-- follow-post.ts
|   |   |   |   |-- get-following.ts
|   |   |   |   |-- get-post-info.ts
|   |   |   |   |-- get-user-info.ts
|   |   |   |   |-- index.ts
|   |   |   |   |-- like-post.ts
|   |   |   |   |-- unfollow-user.ts
|   |   |   |-- index.ts
|   |   |-- interfaces/
|   |   |   |-- index.ts
|   |   |-- scheduler/
|   |   |   |-- index.ts
|   |   |   |-- jobs.ts
|   |   |-- scraper/
|   |   |   |-- scraper.js
|   |   |-- utils/
|   |   |   |-- index.ts
|   |   |-- wit/
|   |   |   |-- index.ts
|   |-- config.ts
|   |-- index.ts
|-- tsconfig.json
|-- tslint.json

Browser Interface

With that covered, let’s create our browser interface, which we will use to get rendered pages from Puppeteer.

Our getPage function opens a new browser page, navigates to the provided URL and injects our scraper (covered later). It then awaits the promise returned by our callback and closes the page, whether the callback succeeded or failed.

import * as path from 'path';
import * as puppeteer from 'puppeteer';
import * as api from './api';
import { GetPage, CloseBrowser, CreateBrowser } from 'src/common/interfaces';

const getUrl = (endpoint: string): string => `https://www.instagram.com${endpoint}`;

const createBrowser: CreateBrowser = async () => {
  let browser = await puppeteer.launch({
    headless: true,
    // ask for an English UI so that text-based lookups ('Log in', 'Follow', ...) match
    args: ['--lang=en-US,en'],
  });

  // Opens a page at `endpoint`, injects the scraper, runs `fn` and always closes the page.
  const getPage: GetPage = async function getPage(endpoint, fn) {
    let page: puppeteer.Page | undefined;

    try {
      const url = getUrl(endpoint);
      console.log(url);

      page = await browser.newPage();
      await page.goto(url, { waitUntil: 'load' });

      // forward the page's console output to Node's console
      page.on('console', (msg) => {
        const args = msg.args();
        for (let i = 0; i < args.length; i += 1) {
          console.log(`${i}: ${args[i]}`);
        }
      });

      // inject our scraper helper; it exposes itself as window.scraper
      await page.addScriptTag({
        path: path.join(__dirname, '../../../src/common/scraper/scraper.js'),
      });

      return await fn(page);
    } finally {
      // close the page whether fn resolved or threw, so no tabs are leaked
      if (page) {
        await page.close();
      }
    }
  };

  const close: CloseBrowser = async function close() {
    await browser.close();
    browser = null;
  };

  return {
    getPage,
    close,
    ...api,
  };
};

export { createBrowser };

browser-index.ts

To be clear, Puppeteer is on its own a browser interface; we have just abstracted away some code that would otherwise be repeated constantly.

Because getPage always closes the page it opened, we don’t have to worry about memory leaks caused by pages left open by accident.
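The guarantee comes from closing the page in every code path. Stripped of Puppeteer, the pattern looks roughly like the sketch below. FakePage, openFakePage and withPage are hypothetical names used only for illustration, so the snippet runs in plain Node without a browser.

```typescript
// Hypothetical stand-in for a Puppeteer page: just enough to show the pattern.
interface FakePage {
  closed: boolean;
  close(): Promise<void>;
}

// Creates a FakePage whose close() records that it was called.
async function openFakePage(): Promise<FakePage> {
  const page: FakePage = {
    closed: false,
    async close() {
      page.closed = true;
    },
  };
  return page;
}

// Opens a page, hands it to `fn` and closes it however `fn` finishes,
// which is the same guarantee getPage gives us.
async function withPage<T>(
  open: () => Promise<FakePage>,
  fn: (page: FakePage) => Promise<T>,
): Promise<T> {
  const page = await open();
  try {
    return await fn(page);
  } finally {
    await page.close();
  }
}
```

In the real getPage, `open` corresponds to `browser.newPage()` and `fn` to the callback passed in by the API functions.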

Scraper

Another helpful thing for anything related to web scraping (which is more or less what we are doing here) is a scraper helper of your own. Ours will come in handy as we move on to more advanced scraping.

First, we define some helpers, mostly for setting data attributes. Next, there is an Element class, a thin wrapper around a regular HTMLElement. Finally, there is a find function, which gives us a more developer-friendly way of querying elements. The script runs inside the page and exposes everything as window.scraper.

const scraper = ((window, document) => {
  const capitalize = str => str.slice(0, 1).toUpperCase() + str.slice(1);

  const waitFor = ms => new Promise(resolve => setTimeout(() => {
    resolve();
  }, ms));

  const getFormattedDataName = key => `scraper${capitalize(key)}`;

  const getDataAttrName = (key) => {
    const reg = /[A-Z]{1}/g;
    const name = key.replace(reg, match => `-${match[0].toLowerCase()}`);

    return `data-${name}`;
  };

  // in dataset like { scraperXYZ: 123 }
  // in query 'div[data-scraper-x-y-z="123"]';

  class Element {
    constructor(el) {
      if (!(el instanceof HTMLElement)) {
        throw new TypeError('Element must be instance of HTMLElement.');
      }

      this.el = el;
    }

    text() {
      return String(this.el.textContent).trim();
    }

    get() {
      return this.el;
    }

    html() {
      return this.el.innerHTML;
    }

    dimensions() {
      return JSON.parse(JSON.stringify(this.el.getBoundingClientRect()));
    }

    clone() {
      return new Element(this.el.cloneNode(true));
    }

    scrollIntoView() {
      this.el.scrollIntoView(true);

      return this.el;
    }

    setAttr(key, value) {
      this.el.setAttribute(key, value);

      return this;
    }

    getAttr(key) {
      return this.el.getAttribute(key);
    }

    getTag() {
      return this.el.tagName.toLowerCase();
    }

    setscraperAttr(key, value) {
      const scraperKey = getFormattedDataName(key);
      this.el.dataset[scraperKey] = value;

      return this;
    }

    parent() {
      return new Element(this.el.parentNode);
    }

    setClass(className) {
      this.el.classList.add(className);

      return this;
    }

    getscraperAttr(key) {
      return this.el.dataset[getFormattedDataName(key)];
    }

    getSelectorByscraperAttr(key) {
      const scraperValue = this.getscraperAttr(key);
      const scraperKey = getDataAttrName(getFormattedDataName(key));
      const tagName = this.getTag();

      return `${tagName}[${scraperKey}="${scraperValue}"]`;
    }
  }

  const find = ({ selector, where = () => true, count }) => {
    if (count === 0) {
      return [];
    }

    const elements = Array.from(document.querySelectorAll(selector));
    let sliceArgs = [0, count || elements.length];

    if (count < 0) {
      sliceArgs = [count];
    }

    return elements
      .map(el => new Element(el))
      .filter(where)
      .slice(...sliceArgs);
  };

  const findOne = ({ selector, where }) => find({ selector, where, count: 1 })[0] || null;

  const getDocumentDimensions = () => {
    const height = Math.max(
      document.documentElement.clientHeight,
      document.body.scrollHeight,
      document.documentElement.scrollHeight,
      document.body.offsetHeight,
      document.documentElement.offsetHeight,
    );

    const width = Math.max(
      document.documentElement.clientWidth,
      document.body.scrollWidth,
      document.documentElement.scrollWidth,
      document.body.offsetWidth,
      document.documentElement.offsetWidth,
    );

    return {
      height,
      width,
    };
  };

  const scrollToPosition = {
    top: () => {
      window.scrollTo(0, 0);

      return true;
    },
    bottom: () => {
      const { height } = getDocumentDimensions();
      window.scrollTo(0, height);

      return true;
    },
  };

  const scrollPageTimes = async ({ times = 0, direction = 'bottom' }) => {
    if (!times || times < 1) {
      return true;
    }

    await new Array(times).fill(0).reduce(async (prev) => {
      await prev;
      scrollToPosition[direction]();
      await waitFor(2000);
    }, Promise.resolve());

    return true;
  };

  const findOneWithText = ({ selector, text }) => {
    const lowerText = text.toLowerCase();

    return findOne({
      selector,
      where: (el) => {
        const textContent = el.text().toLowerCase();

        return lowerText === textContent;
      },
    });
  };

  return {
    Element,
    findOneWithText,
    scrollPageTimes,
    scrollToPosition,
    getDocumentDimensions,
    find,
    findOne,
    waitFor,
  };
})(window, document);

window.scraper = scraper;
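The data-attribute trick is what lets us hand an element found inside the page back to Puppeteer: within page.evaluate we tag the element through its dataset, then return a plain selector string that page.$ can use on the Node side. The naming helpers can be ported to TypeScript and run without a DOM to see exactly which selector they produce. This is a standalone sketch mirroring getFormattedDataName, getDataAttrName and getSelectorByscraperAttr; selectorFor is a hypothetical name.

```typescript
// Standalone port of the scraper's naming helpers (no DOM required).
const capitalize = (str: string): string => str.slice(0, 1).toUpperCase() + str.slice(1);

// 'logInButton' -> 'scraperLogInButton' (the key used in element.dataset)
const getFormattedDataName = (key: string): string => `scraper${capitalize(key)}`;

// 'scraperLogInButton' -> 'data-scraper-log-in-button' (the HTML attribute name)
const getDataAttrName = (key: string): string =>
  `data-${key.replace(/[A-Z]/g, (match) => `-${match.toLowerCase()}`)}`;

// Mirrors Element#getSelectorByscraperAttr for a given tag, key and value.
const selectorFor = (tagName: string, key: string, value: string): string =>
  `${tagName}[${getDataAttrName(getFormattedDataName(key))}="${value}"]`;

console.log(selectorFor('button', 'logInButton', 'logInButton'));
// button[data-scraper-log-in-button="logInButton"]
```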

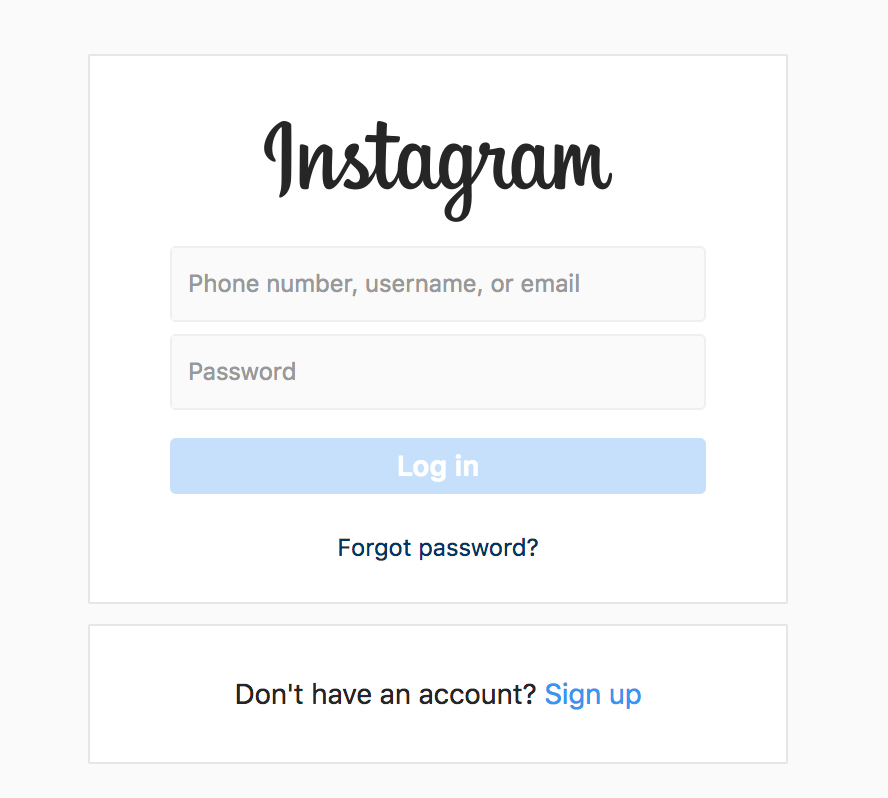

Authentication

Finally, we can create our first function that is actually related to Instagram. When we open the browser for the first time we need to authenticate our user.

First, we wait for the login form to load, then we type in the credentials provided in config.ts, with a 100 ms delay between keystrokes. Then we look for the Log in button: if it exists, we click it; otherwise, we throw an error.

src/common/browser/api/authenticate.ts

import { Authenticate } from 'src/common/interfaces';

const authenticate: Authenticate = function authenticate({ username, password }) {
  return this.getPage('/accounts/login', async (page) => {
    await page.waitForSelector('input[name="username"]');

    const usernameInput = await page.$('input[name="username"]');
    const passwordInput = await page.$('input[name="password"]');

    await usernameInput.type(username, { delay: 100 });
    await passwordInput.type(password, { delay: 100 });

    const logInButtonSelector = await page.evaluate(() => {
      const { scraper } = window as any;

      const logInButton = scraper.findOneWithText({
        selector: 'button',
        text: 'Log in',
      });

      if (!logInButton) {
        return '';
      }

      return logInButton
        .setscraperAttr('logInButton', 'logInButton')
        .getSelectorByscraperAttr('logInButton');
    });

    if (!logInButtonSelector) {
      throw new Error('Failed to auth');
    }

    const logInButton = await page.$(logInButtonSelector);
    await logInButton.click();
    await page.waitFor(2000);
  });
};

export { authenticate };

If you would like to see the magic happen, set headless to false in Puppeteer’s launch options. A visible browser window will open, and you can watch every action the bot takes.
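Rather than editing the launch options by hand each time, one option is to derive them from the environment. This is only a sketch: headless and slowMo are real Puppeteer launch options, while getLaunchOptions and the HEADLESS and SLOW_MO variables are hypothetical names that the repository does not define.

```typescript
// Hypothetical helper (not in the repository) that builds the object passed to
// puppeteer.launch(). HEADLESS and SLOW_MO are made-up variable names.
interface LaunchOptions {
  headless: boolean;
  slowMo: number;
  args: string[];
}

function getLaunchOptions(env: Record<string, string | undefined>): LaunchOptions {
  // headless unless HEADLESS is explicitly set to "false"
  const headless = env.HEADLESS !== 'false';

  return {
    headless,
    // when the window is visible, delay each Puppeteer operation (in ms) so the
    // actions are easy to follow; 50 ms unless SLOW_MO says otherwise
    slowMo: headless ? 0 : Number(env.SLOW_MO) || 50,
    // keep the English UI that the text-based lookups depend on
    args: ['--lang=en-US,en'],
  };
}
```

In createBrowser you would then call `puppeteer.launch(getLaunchOptions(process.env))` and set `HEADLESS=false` in .env while debugging.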

Now that we are logged in, Instagram sets session cookies in our browser, so we won’t have to log in again as long as this browser instance keeps running (and the session doesn’t expire).

We can close the page (our interface takes care of that) and move on to our first function for finding posts with a #hashtag.

Instagram’s URL for the most recent posts with a given #hashtag is https://www.instagram.com/explore/tags/ followed by the hashtag, for example https://www.instagram.com/explore/tags/follow4follow.

The first 9 posts are always Top posts. Their authors already collect thousands of likes and follows, so they will probably never return ours. Ideally, we should skip them and collect only the recent ones.

More posts load as we scroll down. Each scroll brings in 12 more posts, so we have to calculate how many times we need to scroll to get the expected number.

On the first load, there are 9 top posts and 12 recent posts, 21 in total. If we want 36 recent posts (skipping the 9 top ones), we subtract the 12 recent posts that are already loaded and divide the rest by 12 to find out how many times we have to scroll.

36 (wanted) - 12 (already loaded) = 24 (missing posts)

24 / 12 = 2 (number of scrolls)

If the number of missing posts is not a multiple of 12, we round the division up. We also add one extra scroll as a safety net, in case something takes too long to render.
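The arithmetic above corresponds to `Math.ceil((posts - 12) / 12) + 1` in findPosts. Pulled out into a pure function, it is easy to check. The constants reflect the counts observed in this article (9 top posts, 12 recent posts per load) and may change whenever Instagram changes its layout; scrollsNeeded and linksToCollect are hypothetical names.

```typescript
const POSTS_ON_FIRST_LOAD = 12; // recent posts rendered before any scrolling
const POSTS_PER_SCROLL = 12; // recent posts added by each scroll
const TOP_POSTS = 9; // "Top posts" shown first, which we skip

// Number of scrolls findPosts performs for a requested number of recent posts.
function scrollsNeeded(numberOfPosts: number): number {
  if (numberOfPosts <= POSTS_ON_FIRST_LOAD) {
    return 0; // the first load already has enough posts
  }
  const missing = numberOfPosts - POSTS_ON_FIRST_LOAD;
  return Math.ceil(missing / POSTS_PER_SCROLL) + 1; // +1 is the safety-net scroll
}

// How many post links to collect before slicing off the top posts.
const linksToCollect = (numberOfPosts: number): number => numberOfPosts + TOP_POSTS;

console.log(scrollsNeeded(36)); // 3: two scrolls for the 24 missing posts, plus one
```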

src/common/browser/api/find-posts.ts

import { FindPosts } from 'src/common/interfaces';

const findPosts: FindPosts = async function findPosts(hashtag, numberOfPosts = 12) {
  return this.getPage(`/explore/tags/${hashtag}`, async (page) => {
    if (numberOfPosts > 12) {
      // 12 recent posts come with the first load; each scroll adds 12 more, plus one spare scroll
      await page.evaluate(async (posts) => {
        const { scraper } = window as any;
        await scraper.scrollPageTimes({ times: Math.ceil((posts - 12) / 12) + 1 });
      }, numberOfPosts);
    }

    // give the freshly loaded posts time to render
    await page.waitFor(1500);

    return page.evaluate((posts) => {
      const { scraper } = window as any;

      // collect the post links, then drop the 9 "Top posts"
      return scraper
        .find({ selector: 'a[href^="/p/"]', count: posts + 9 })
        .slice(9)
        .map(el => el.getAttr('href'));
    }, numberOfPosts);
  });
};

export { findPosts };

We can iterate over the returned URLs and execute a given set of actions on each one.

The thing is, we don’t know anything about the post except its URL, but we can find all the necessary information by scraping it.

Getting information about a post

A post’s page holds a lot of useful information, such as:

- Is the author followed?

- The follow button selector

- Is the post liked?

- The like button selector

- The author’s username

- The description and the comments

- The comment selector

- The number of likes

But before we go any further…

Adding NLP for our comments

There is one thing we should take into consideration: what is the purpose of the post? Is it to show off someone’s new watch or to mourn a departed relative?

Ideally, we would like to know what the post is about. Here’s how we can do that:

Wit.ai is a service from Facebook that lets us create an app and teach it to understand sentences.

This is where NLP comes in; it stands for Natural Language Processing. It is also built into the Messenger API, should you ever want to make a chatbot, for example.

While it may take some time, we can teach our app to understand the description of a post and give us insights.

It is very simple, really: all we have to do is tell it what to look for in a sentence. In our case, the sentence is a post’s description, which we send with the node-wit library.

First, you will need to create an account on wit.ai. You can use your GitHub account to log in.

Then you can either create your own app or use someone else’s. If you would like to use my trained app, you can find it here.

Our app takes a message and returns whether it’s happy_description or sad_description and how sure it is about it.
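The helper that follows reads `result.entities.intent[0]`. If Wit.ai detects no intent, that path is undefined, so a neutral fallback is worth having. Here is a defensive sketch: the WitLikeResponse type models only the fields this article’s code reads and is an assumption, not Wit.ai’s full response schema. pickIntent and isConfident are hypothetical helpers; isConfident mirrors shouldPostComment with the 0.7 threshold from config.ts.

```typescript
interface Intent {
  value: string;
  confidence: number;
}

// Only the part of the response that getIntentFromMessage reads (an assumption).
interface WitLikeResponse {
  entities?: { intent?: Intent[] };
}

const NO_INTENT: Intent = { value: '', confidence: 0 };

// Returns the most confident intent, or a neutral one when none was detected.
function pickIntent(response: WitLikeResponse): Intent {
  const candidates = response.entities?.intent ?? [];
  return candidates.reduce<Intent>(
    (best, current) => (current.confidence > best.confidence ? current : best),
    NO_INTENT,
  );
}

// Only comment when the model is confident enough about the intent.
const isConfident = (intent: Intent, expectedConfidence = 0.7): boolean =>
  intent.confidence >= expectedConfidence;
```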

We also use an emoji library (node-emoji) to make our comments more lively.

Now let’s put our token in config.ts and write a little helper for turning messages into intents and generating comments based on the intent provided.

src/common/wit/index.ts

import { Wit } from 'node-wit';
import * as _ from 'lodash';
import * as emoji from 'node-emoji';
import { Intent } from 'src/common/interfaces';
import { wit as witConfig, comments as commentsConfig } from 'src/config';
import { getRandomItem } from 'src/common/utils';

const client = new Wit(_.pick(witConfig, ['accessToken']));

// returned whenever a message cannot (or should not) be classified
const emptyIntent: Intent = {
  confidence: 0,
  value: '',
};

const getIntentFromMessage = async (message: string, context: any = {}): Promise<Intent> => {
  // wit.ai can't handle messages longer than 280 characters
  if (!message || message.length > 280) {
    return emptyIntent;
  }

  const result = await client.message(message, context);

  // fall back to the empty intent when wit.ai detects no intent at all;
  // otherwise getMessageBasedOnIntent would crash on `undefined`
  return _.get(result, ['entities', 'intent', '0'], emptyIntent);
};

const intentToCategory = {
  happy_description: 'happy',
  sad_description: 'sad',
};

const emojis = {
  happy: [
    'smiley', 'smirk', 'pray', 'rocket', 'kissing_closed_eyes', 'sunglasses',
    'heart_eyes', 'joy', 'relieved', 'wink', 'innocent', 'smiling_imp',
    'sweat_smile', 'blush', 'yum', 'triumph',
  ],
  sad: ['cry', 'sob', 'cold_sweat', 'disappointed', 'worried'],
};

const getRandomEmoji = (type: string) => {
  const emojisForType: string[] = emojis[type];

  if (!emojisForType) {
    return '';
  }

  const emojiName = getRandomItem<string>(emojisForType);

  return emoji.get(emojiName);
};

// get random emojis for given type, for example: happy
const getRandomEmojis = (count: number, type: string) =>
  new Array(count)
    .fill(type)
    .map(getRandomEmoji)
    .join('');

const shouldPostComment = (intent: Intent): boolean =>
  intent.confidence >= witConfig.expectedConfidence;

const generateComment = (category: string): string => {
  // to enable emoji, uncomment this line (getRandomNumber is not imported in this file)
  // const randomEmojis = getRandomEmojis(getRandomNumber(0, 2), category);
  const randomEmojis = '';

  // trim so that an empty emoji string doesn't leave a trailing space
  return [getRandomItem<string>(commentsConfig[category]), randomEmojis].join(' ').trim();
};

const getMessageBasedOnIntent = (intent: Intent): string => {
  const category: string = intentToCategory[intent.value];

  if (!category || !shouldPostComment(intent)) {
    return '';
  }

  return generateComment(category);
};

export { client, getIntentFromMessage, getMessageBasedOnIntent, shouldPostComment };

While the code is ready for us to put emoji into our comments, Puppeteer has had some issues lately with typing them. Once the issue is resolved, just uncomment the line and you are good to go.

Now that we can work out a post’s intent, we can write getPostInfo. Using selectors we previously found by inspecting the website, it reaches the elements holding the data.

src/common/browser/api/get-post-info.ts

import * as numeral from 'numeral';
import { GetPostInfo } from 'src/common/interfaces';
import { getIntentFromMessage } from 'src/common/wit';

const getPostInfo: GetPostInfo = async function getPostInfo(page) {
  const { likeSelector = '', isLiked = false, unlikeSelector = '' } =
    (await page.evaluate(() => {
      const { scraper } = window as any;

      const likeSpan = scraper.findOne({
        selector: 'span',
        where: (el) => el.html() === 'Like',
      });

      if (!likeSpan) {
        const unlikeSpan = scraper.findOne({
          selector: 'span',
          where: (el) => el.html() === 'Unlike',
        });

        const unlikeSelector = unlikeSpan
          .parent()
          .setscraperAttr('unlikeHeart', 'unlikeHeart')
          .getSelectorByscraperAttr('unlikeHeart');

        return {
          unlikeSelector,
          isLiked: true,
          likeSelector: unlikeSelector,
        };
      }

      const likeSelector = likeSpan
        .parent()
        .setscraperAttr('likeHeart', 'likeHeart')
        .getSelectorByscraperAttr('likeHeart');

      return {
        likeSelector,
        isLiked: false,
        unlikeSelector: likeSelector,
      };
    })) || {};

  const { followSelector = '', isFollowed = false, unfollowSelector = '' } =
    (await page.evaluate(() => {
      const { scraper } = window as any;

      const followButton = scraper.findOneWithText({
        selector: 'button',
        text: 'Follow',
      });

      if (!followButton) {
        const unfollowButton = scraper.findOneWithText({
          selector: 'button',
          text: 'Following',
        });

        const unfollowSelector = unfollowButton
          .setscraperAttr('unfollowButton', 'unfollowButton')
          .getSelectorByscraperAttr('unfollowButton');

        return {
          unfollowSelector,
          isFollowed: true,
          followSelector: unfollowSelector,
        };
      }

      const followSelector = followButton
        .setscraperAttr('followButton', 'followButton')
        .getSelectorByscraperAttr('followButton');

      return {
        followSelector,
        isFollowed: false,
        unfollowSelector: followSelector,
      };
    })) || {};

  const likes = numeral(
    await page.evaluate(() => {
      const { scraper } = window as any;
      const el = scraper.findOne({ selector: 'a[href$="/liked_by/"] > span' });

      if (!el) {
        return 0;
      }

      return el.text();
    }),
  ).value();

  const { description = '', comments = [] } = await page.evaluate(() => {
    const { scraper } = window as any;

    const comments = scraper
      .find({ selector: 'div > ul > li > span' })
      .map((el) => el.text());

    return {
      description: comments[0],
      comments: comments.slice(1),
    };
  });

  const author = await page.evaluate(() => {
    const { scraper } = window as any;

    return scraper.findOne({ selector: 'a[title].notranslate' }).text();
  });

  const commentButtonSelector = await page.evaluate(() => {
    const { scraper } = window as any;

    return scraper
      .findOneWithText({ selector: 'span', text: 'Comment' })
      .parent()
      .setscraperAttr('comment', 'comment')
      .getSelectorByscraperAttr('comment');
  });

  const commentSelector = 'textarea[autocorrect="off"]';
  const postIntent = await getIntentFromMessage(description);

  return {
    likeSelector,
    unlikeSelector,
    isLiked,
    isFollowed,
    followSelector,
    unfollowSelector,
    likes,
    comments,
    description,
    author,
    commentSelector,
    commentButtonSelector,
    postIntent,
  };
};

export { getPostInfo };

Getting information about users

There is only so much we can take from a post. Sometimes we are also interested in the author’s profile.

We can glean a lot of useful information such as:

- The number of posts

- The number of followers

- The number of following

- Is the account followed?

- Bio (the description), though since it is of no use to us, we are not going to scrape it.

src/common/browser/api/get-user-info.ts

import * as numeral from 'numeral';
import { GetUserInfo } from 'src/common/interfaces';

const getUserInfo: GetUserInfo = async function getUserInfo(username) {
  return this.getPage(`/${username}`, async (page) => {
    const [posts, followers, following] = await page.evaluate(() => {
      const { scraper } = window as any;

      return scraper
        .find({
          selector: 'li span',
        })
        .slice(1)
        .map(el => el.text());
    });

    const isFollowed = await page.evaluate(() => {
      const { scraper } = window as any;
      const followButton = scraper.findOneWithText({ selector: 'button', text: 'Follow' });

      if (followButton) {
        return false;
      }

      return true;
    });

    return {
      isFollowed,
      username,
      following: numeral(following).value(),
      followers: numeral(followers).value(),
      posts: numeral(posts).value(),
    };
  });
};

export { getUserInfo };
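getUserInfo relies on numeral to turn counters such as "1,234" into numbers. If you would rather avoid the dependency, below is a hypothetical parseCount helper. The formats it accepts (plain digits, comma thousands separators, k/m suffixes) are an assumption about how Instagram renders counters with the English UI we request via --lang, not something the article verifies.

```typescript
// Hypothetical, dependency-free alternative to numeral(...).value() for counters
// such as "532", "1,234", "12.5k" or "1.2m". Returns null for unknown formats.
function parseCount(text: string): number | null {
  const normalized = text.replace(/,/g, '').trim().toLowerCase();
  const match = /^(\d+(?:\.\d+)?)([km])?$/.exec(normalized);
  if (!match) {
    return null; // let the caller decide how to handle an unexpected format
  }
  const multiplier = match[2] === 'k' ? 1e3 : match[2] === 'm' ? 1e6 : 1;
  // round to drop floating-point noise, e.g. from 1.2 * 1e6
  return Math.round(parseFloat(match[1]) * multiplier);
}
```

Returning null instead of 0 keeps "could not parse" distinguishable from a genuine zero.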

Post actions

First, let’s implement like-post.ts.

We simply check if the post is already liked. If not, we take the like selector and click on it.

src/common/browser/api/like-post.ts

import { LikePost } from 'src/common/interfaces';

const likePost: LikePost = async function likePost(page, post) {
  if (post.isLiked) {
    return page;
  }

  await page.click(post.likeSelector);
  await page.waitFor(2500);
  await page.waitForSelector(post.unlikeSelector);

  return page;
};

export { likePost };

The same goes for follow-post.ts.

src/common/browser/api/follow-post.ts

import { FollowPost } from 'src/common/interfaces';

const followPost: FollowPost = async function followPost(page, post) {
  if (post.isFollowed) {
    return page;
  }

  await page.click(post.followSelector);
  await page.waitFor(1500);
  await page.waitForSelector(post.unfollowSelector);

  return page;
};

export { followPost };

In comment-post.ts, we click the comment button, type the comment into the textarea and press Enter.

src/common/browser/api/comment-post.ts

import { CommentPost } from 'src/common/interfaces';

const commentPost: CommentPost = async function commentPost(page, post, message) {
  await page.click(post.commentButtonSelector);
  await page.waitForSelector(post.commentSelector);
  await page.type(post.commentSelector, message, { delay: 200 });
  await page.keyboard.press('Enter');
  await page.waitFor(2500);

  return page;
};

export { commentPost };

User actions

Our bot also has to be able to unfollow people; otherwise, it would eventually hit the limit of 7,500 follows.

First, we need to get the usernames of the people we are following.

We click the following link on our profile.

A list should show up with the users we have most recently followed; we take the first 20. We can then run unfollow-user.ts for each username.

src/common/browser/api/get-following.ts

import { GetFollowing } from 'src/common/interfaces';

const getFollowing: GetFollowing = async function getFollowing(username) {
  return this.getPage(`/${username}`, async (page) => {
    let buttonOfFollowingList = await page.$(`a[href="/${username}/following/"]`);

    if (!buttonOfFollowingList) {
      // fall back to any link whose text mentions "following"
      const buttonOfFollowingListSelector = await page.evaluate(() => {
        const { scraper } = window as any;

        const el = scraper.findOne({
          selector: 'a',
          where: link => link.text().includes('following'),
        });

        if (!el) {
          return '';
        }

        return el
          .setscraperAttr('buttonOfFollowingList', 'buttonOfFollowingList')
          .getSelectorByscraperAttr('buttonOfFollowingList');
      });

      if (buttonOfFollowingListSelector) {
        buttonOfFollowingList = await page.$(buttonOfFollowingListSelector);
      }
    }

    // nothing to click, so there is nobody we can list (and unfollow) right now
    if (!buttonOfFollowingList) {
      return [];
    }

    await buttonOfFollowingList.click();
    await page.waitFor(1000);

    // usernames (taken from the title attribute) of the first 20 accounts in the list
    const following = await page.evaluate(async () => {
      const { scraper } = window as any;

      return scraper
        .find({
          selector: 'a[title].notranslate',
        })
        .slice(0, 20)
        .map(el => el.getAttr('title'));
    });

    return following;
  });
};

export { getFollowing };

Now that we have the usernames, we simply unfollow one user at a time: we click the Following button on their profile and then confirm in the dialog that appears.

src/common/browser/api/unfollow-user.ts

import { UnFollowUser } from 'src/common/interfaces';

const unfollowUser: UnFollowUser = async function unfollowUser(username) {
  return this.getPage(`/${username}`, async (page) => {
    const unfollowButtonSelector = await page.evaluate(() => {
      const { scraper } = window as any;

      let el = scraper.findOneWithText({
        selector: 'button',
        text: 'Following',
      });

      if (!el) {
        // fallback: hard-coded Instagram class names, which may change at any time
        el = scraper.findOne({
          selector: 'button._qv64e._t78yp._r9b8f._njrw0',
        });
      }

      if (!el) {
        return '';
      }

      return el
        .setscraperAttr('following', 'following')
        .getSelectorByscraperAttr('following');
    });

    if (!unfollowButtonSelector) {
      return null;
    }

    const unfollowButton = await page.$(unfollowButtonSelector);
    await unfollowButton.click();

    // clicking "Following" opens a confirmation dialog with an "Unfollow" button
    const confirmUnfollowButtonSelector = await page.evaluate(() => {
      const { scraper } = window as any;
      const confirmButton = scraper.findOneWithText({ selector: 'button', text: 'Unfollow' });

      if (!confirmButton) {
        return '';
      }

      return confirmButton
        .setscraperAttr('confirmUnfollowButton', 'confirmUnfollowButton')
        .getSelectorByscraperAttr('confirmUnfollowButton');
    });

    if (!confirmUnfollowButtonSelector) {
      return null;
    }

    // await the click so that failures are caught instead of becoming unhandled rejections
    await (await page.$(confirmUnfollowButtonSelector)).click();
    await page.waitFor(1500);
  });
};

export { unfollowUser };

Scheduler

Now that everything is ready, we have to think about how our bot is going to work.

Obviously, it can’t just mindlessly keep following people all the time because, as you may have guessed, Instagram would ban it very quickly.

From what I have read and seen during my own tests, the limits differ depending on the account’s size and age.

Let’s play it safe for now. Don’t worry, though: as an account grows, the limits get pushed further.

We can create a simple scheduler that runs at the top of every hour and executes each registered job whose time ranges include the current hour. Each job then starts after a random delay of less than 30 minutes, so it doesn’t always begin on the hour.

src/common/scheduler/index.ts

import * as nodeschedule from 'node-schedule';
import * as moment from 'moment';
import { Browser } from 'src/common/interfaces';
import { jobs, Job } from './jobs';

const getMillisecondsFromMinutes = (minutes: number) => minutes * 60000;

// '0 * * * *' (cron syntax): run at minute 0 of every hour
const schedule = (jobsToRun: Job[], browser: Browser) =>
  nodeschedule.scheduleJob('0 * * * *', () => {
    const hour = moment().hour();

    console.log('Executing jobs for', moment().format('dddd, MMMM Do YYYY, h:mm:ss a'));

    jobsToRun.forEach((job) => {
      if (!job.validateRanges(hour)) {
        return;
      }

      // so we don't start our jobs at x:00 all the time
      const delayedMinutes = Math.floor(Math.random() * 30);
      const cb = () => job.execute(browser);

      setTimeout(cb, getMillisecondsFromMinutes(delayedMinutes));
    });
  });

export { schedule, jobs };
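The scheduler’s decision for a given hour boils down to an inclusive range check (the same one Job#validateRanges performs) plus a random delay of 0 to 29 whole minutes. With the random source injected, it can be tested in isolation. This is a sketch; isInRanges, randomDelayMs, planHour and ScheduledJob are hypothetical names.

```typescript
type HourRange = [number, number];

interface ScheduledJob {
  name: string;
  ranges: HourRange[];
}

// Inclusive check, like Job#validateRanges: [11, 14] matches 11, 12, 13 and 14.
const isInRanges = (hour: number, ranges: HourRange[]): boolean =>
  ranges.some(([start, end]) => hour >= start && hour <= end);

// 0 to 29 whole minutes, in milliseconds, like Math.floor(Math.random() * 30)
// in the scheduler. `random` is injectable so the result can be tested.
const randomDelayMs = (random: () => number = Math.random): number =>
  Math.floor(random() * 30) * 60000;

// Jobs that would start during `hour`, each with its own random delay.
function planHour(
  hour: number,
  jobs: ScheduledJob[],
  random: () => number = Math.random,
): { name: string; delayMs: number }[] {
  return jobs
    .filter((job) => isInRanges(hour, job.ranges))
    .map((job) => ({ name: job.name, delayMs: randomDelayMs(random) }));
}
```

Running planHour against the ranges registered in src/index.ts at the end of this article shows that both jobs fire at 13, 14, 17, 18, 21, 22 and 23 o’clock, because their ranges overlap. Also, moment().hour() returns 0 to 23, so the 24 in [21, 24] never matches on its own; it is harmless.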

src/common/scheduler/jobs.ts

import { Browser } from 'src/common/interfaces';
import { reduceAsync, getRandomItem, probability } from 'src/common/utils';
import { job as jobConfig, auth as authConfig } from 'src/config';
import { getMessageBasedOnIntent } from 'src/common/wit';

class Job {
  private ranges: number[][] = [];

  public execute(browser: Browser) {
    throw new Error('Execute must be provided.');
  }

  public constructor(ranges: number[][]) {
    this.ranges = ranges;
  }

  public validateRanges(hour: number): boolean {
    return Boolean(
      this.ranges.find(
        (range): boolean => {
          const [startHour, endHour] = range;

          return hour >= startHour && hour <= endHour;
        },
      ),
    );
  }
}

class FollowJob extends Job {
  public async execute(browser: Browser) {
    try {
      const hashtag = getRandomItem(jobConfig.hashtags);
      const postsUrls = await browser.findPosts(hashtag, jobConfig.numberOfPosts);

      await reduceAsync<string, void>(
        postsUrls,
        async (prev, url) =>
          browser.getPage(url, async (page) => {
            try {
              const post = await browser.getPostInfo(page);

              await browser.likePost(page, post);
              console.log('liked');

              await browser.followPost(page, post);
              console.log('followed');

              const message = getMessageBasedOnIntent(post.postIntent);

              if (probability(jobConfig.commentProbability) && message) {
                await browser.commentPost(page, post, message);
                console.log('commented');
              }
            } catch (e) {
              console.log('Failed to like/follow/comment.');
              console.log(e);
            }
          }),
        35,
      );

      console.log('FollowJob executed successfully.');
    } catch (e) {
      console.log('FollowJob failed to execute.');
      console.log(e);
    }
  }
}

class UnfollowJob extends Job {
  public async execute(browser: Browser) {
    try {
      const following = await browser.getFollowing(authConfig.username);

      await reduceAsync<string, void>(
        following,
        async (result, username) => {
          await browser.unfollowUser(username);
        },
        35,
      );

      console.log('UnfollowJob executed successfully');
    } catch (e) {
      console.log('UnfollowJob failed to execute.');
      console.log(e);
    }
  }
}

const jobs = {
  UnfollowJob,
  FollowJob,
};

export { Job, jobs };
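The jobs import getRandomItem and probability from src/common/utils, which the article does not show. Here are hypothetical versions, only to make the behavior the jobs rely on concrete; the repository’s real implementations may differ. In particular, treating `commentProbability: 65` as a percentage is an assumption.

```typescript
// Hypothetical sketch of two helpers from src/common/utils (not shown in the article).

// Picks a uniformly random element; throws on an empty array.
function getRandomItem<T>(items: T[]): T {
  if (items.length === 0) {
    throw new Error('getRandomItem: empty array');
  }
  // Math.random() is in [0, 1), so the index is always in [0, items.length - 1]
  return items[Math.floor(Math.random() * items.length)];
}

// Returns true roughly `percent` percent of the time (0 = never, 100 = always).
const probability = (percent: number): boolean => Math.random() * 100 < percent;
```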

As you might have noticed, we have been using a lot of values from a config. Values read from process.env (the credentials, the Wit.ai token and a few optional numbers) belong in the .env file, which keeps the sensitive ones out of the code. The rest can be changed directly in config.ts.

src/config.ts

const config = {
  auth: {
    username: process.env.USERNAME || '',
    password: process.env.PASSWORD || '',
  },
  job: {
    hashtags: ['like4like', 'follow4follow', 'followforfollow', 'likeforlike'],
    numberOfPosts: Number(process.env.NUMBER_OF_POSTS) || 20,
    unfollow: Number(process.env.NUMBER_OF_UNFOLLOW) || 20,
    commentProbability: 65,
  },
  wit: {
    accessToken: process.env.WIT_TOKEN || '',
    expectedConfidence: 0.7,
  },
  comments: {
    happy: [
      'Great', 'Wow', 'Damn', 'Nice', 'Cool!',
      'Awesome', 'Beautiful', 'Amazing', 'Perfect', 'Wonderful',
    ],
    sad: ['damn', 'im gonna cry', 'nooo', 'why', 'omg', 'oh God'],
  },
};

export = config;
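Because every lookup falls back to '' or a default, a missing .env entry only shows up later, for example as a failed login. The .env file is expected to define USERNAME, PASSWORD and WIT_TOKEN, plus optionally NUMBER_OF_POSTS and NUMBER_OF_UNFOLLOW. One caveat: by default dotenv does not overwrite variables that are already set, and Windows defines USERNAME for the logged-in user, so on Windows process.env.USERNAME may hold your system login rather than the value from .env. Below is a hypothetical fail-fast check; findMissingKeys, readNumber and assertEnv are not part of the repository.

```typescript
// Hypothetical fail-fast variant of the environment lookups in config.ts.
type Env = Record<string, string | undefined>;

const REQUIRED_KEYS = ['USERNAME', 'PASSWORD', 'WIT_TOKEN'];

// Names of required variables that are missing or empty.
const findMissingKeys = (env: Env, keys: string[] = REQUIRED_KEYS): string[] =>
  keys.filter((key) => !env[key]);

// Same rule as config.ts: Number(value) || fallback.
// Note that "0", "abc" and an unset variable all end up as the fallback.
const readNumber = (env: Env, key: string, fallback: number): number =>
  Number(env[key]) || fallback;

// Throws one descriptive error listing every missing variable.
function assertEnv(env: Env): void {
  const missing = findMissingKeys(env);
  if (missing.length > 0) {
    throw new Error(`Missing environment variables: ${missing.join(', ')}`);
  }
}
```

Calling `assertEnv(process.env)` right after `dotenv.config()` in src/index.ts would stop the bot at startup with a clear message instead of a failed login later.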

Let’s register our jobs with the hour ranges in which a normal user would be active; after that, the only thing left is to run the application.

DISCLAIMER

Due to Instagram’s robots.txt policy, such an application is not allowed to be run. The post was made for educational purposes only.

src/index.ts

import 'module-alias/register';
import * as dotenv from 'dotenv';

dotenv.config();

import { createBrowser } from 'src/common/browser';
import { auth as authConfig } from 'src/config';
import { schedule, jobs } from 'src/common/scheduler';

(async () => {
  try {
    const browser = await createBrowser();

    await browser.authenticate(authConfig);

    schedule(
      [
        new jobs.FollowJob([[0, 5], [11, 14], [17, 21], [22, 23]]),
        new jobs.UnfollowJob([[6, 10], [13, 18], [21, 24]]),
      ],
      browser,
    );
  } catch (e) {
    console.log(e);
  }
})();

npm run start

Thank you very much for reading, hope you liked it!
